A Voice for Bill

This article appeared in the Winter, 1979 edition of onComputing Magazine. Text and photos by Mark Dahmke. I added the first photo which was taken by Brian Lanker in October, 1979 for an article that appeared in LIFE Magazine. Permission was granted by Brian Lanker to use this photo.

Bill Rush rolled up to the front desk and began to spell out his request on the language board mounted on his motorized wheelchair. “I would like a Selleck Quad T-shirt please. The money is in the bag on the back of my chair.” I happened to be walking by the desk and paused to see what the desk worker’s reaction would be. After a few moments and several false starts, the interchange was underway. I moved closer to the desk to get a better view of Bill’s spelling board. He spelled, “I bet you didn’t notice that the name ‘Selleck Quad’ has a double meaning.” (Bill and I live in the Selleck Quadrangle dormitory at the University of Nebraska.) At this point, I became very interested. “What’s the other meaning, Bill?” He looked up and smiled. “Quad means Quadrangle, but it also means Quadriplegic.”

The word “Quadriplegic” was hard for me to follow. “Wait a minute — let me get a pencil. Now spell it again Bill.” “Q-U-A-D-R-I-P-L-E-G-I-C” he continued spelling. “I am the other Selleck Quad.” “Oh yes, of course,” I responded, taking a moment to catch the pun.

In spite of the fact that Bill has cerebral palsy with quadriplegia and no speech, he is the same as you or me: just a guy going to college. He is a journalism major and a published author. My first encounters with Bill were in Selleck, the dorm where we both live for nine months out of the year.

I would occasionally meet him coming out the door and would hold it open, or stop to help him if his wheelchair got stuck somewhere. It seems that there are always people ready to assist him out of common courtesy, never pity.

Bill uses a headstick (a long stick attached to a headband) and a language board to communicate. He also uses a Selectric typewriter to do his homework and write articles. He has a set of environmental controls in his room which allow him to turn many electrical devices on and off by remote control. Selleck Quadrangle, as well as most buildings on campus, is set up to be accessible to persons in wheelchairs, so he does not have much trouble getting around.

Yet there are still many barriers in Bill’s way, communication being the primary one. Although he can type and use the language board, it is not the same as talking. Bill once asked, “Ever try to spell things in a dimly lit bar?” The secondary problem is speed. Bill types about as fast as a single-finger, hunt-and-peck typist. At best this means one or two characters per second, so even a simple “Hello” can take an entire minute. Every word (except those on his board) must be meticulously spelled out. Anything complicated can take five minutes to spell and be understood. Unfortunately, most people have trouble retaining a whole phrase or sentence. By the time the end of the sentence is reached, the first part is forgotten in the effort to concentrate on the individual words.

The World of Computers Invades

The first time that I talked with Bill was at a conference on computing at the University. He became interested in computers while taking a FORTRAN course as an elective. I found him investigating an Apple II that was on display. He was trying to ask the owner if the Apple had a shift-lock key. To most of us, a shift-lock key is not a very noteworthy or important feature, but for Bill it is a necessity.

I stopped to say hello. “Bill, we haven’t met, but I live in Selleck and I’ve seen you around a lot.” He nodded, then began to spell. “Does the Apple have a shift-lock?” He asked me because the owner of the Apple was busy demonstrating a graphics program to another group of people. I replied, “No, but we could easily wire one in if you want to get an Apple.” Then I decided to tell him the big news.

“Bill, I’m glad we finally met. I had been planning to come talk to you. I didn’t want to say anything until we were sure, but with the help of Dr Lois Schwab (director of Independent Living at the University of Nebraska at Lincoln), we think that we finally have financial support to build a voice synthesizer computer for you.” Bill became very excited. I went on to explain how the project had started. Back in September I met Dr Schwab, almost by accident. I work in Academic Computing Services on campus. We went out to her department to discuss a grant proposal for academic computing. After the initial grant business, we found ourselves talking about computers, prosthetics, and voice synthesizers. I told her that back in high school I had once planned to build a simple voice synthesizer for a science fair project. Dr Schwab suggested that we look into the possibility of a pilot project. Almost simultaneously, we thought of Bill. Within hours, Dr Schwab contacted the University Affirmative Action office and other agencies, and obtained a commitment of support. Part of the funding came from the Nebraska Division of Rehabilitation Services, since Bill is one of their clients. Another part will come from United Cerebral Palsy of Nebraska, and the rest from the University Affirmative Action office.

Dr Schwab has been interested in microprocessor applications for the severely disabled since 1975, when she began negotiations with the Vocal Interface Division of the Federal Screw Works of Michigan (the leading manufacturer of voice synthesis hardware at that time). They had just released an $11,000 communications machine. Negotiations fell through because Votrax said it would take $250,000 and two years to complete the system she had in mind.

Designing the System

Everything happened so rapidly that I had not had time to think. Suddenly, someone was funding the project I had dreamed about since 1973. It was never completed then, because of the expense of the hardware. Things would be easier now that someone else was paying for the synthesizer, and I already owned an 8080-based computer that could be used to write the software. After purchasing the hardware and estimating the development costs, we discovered that we could put the proposed system together for around $3,000.

Developing the dictionary handler program took up most of my free time for several months. The result was 2500 lines of assembler language that comprise a dictionary-based vocabulary management system (VMS). The vocabulary management system has many features built into it. I wanted Bill to have something that would be sophisticated and capable of being customized and altered as needed, without having to call in a programmer to modify it. I wrote it in assembler language because BASIC is far too slow for this application. Searching a dictionary that could eventually contain 500 or more words and phrases is time-consuming, even for a fast computer.

Modes of Operation

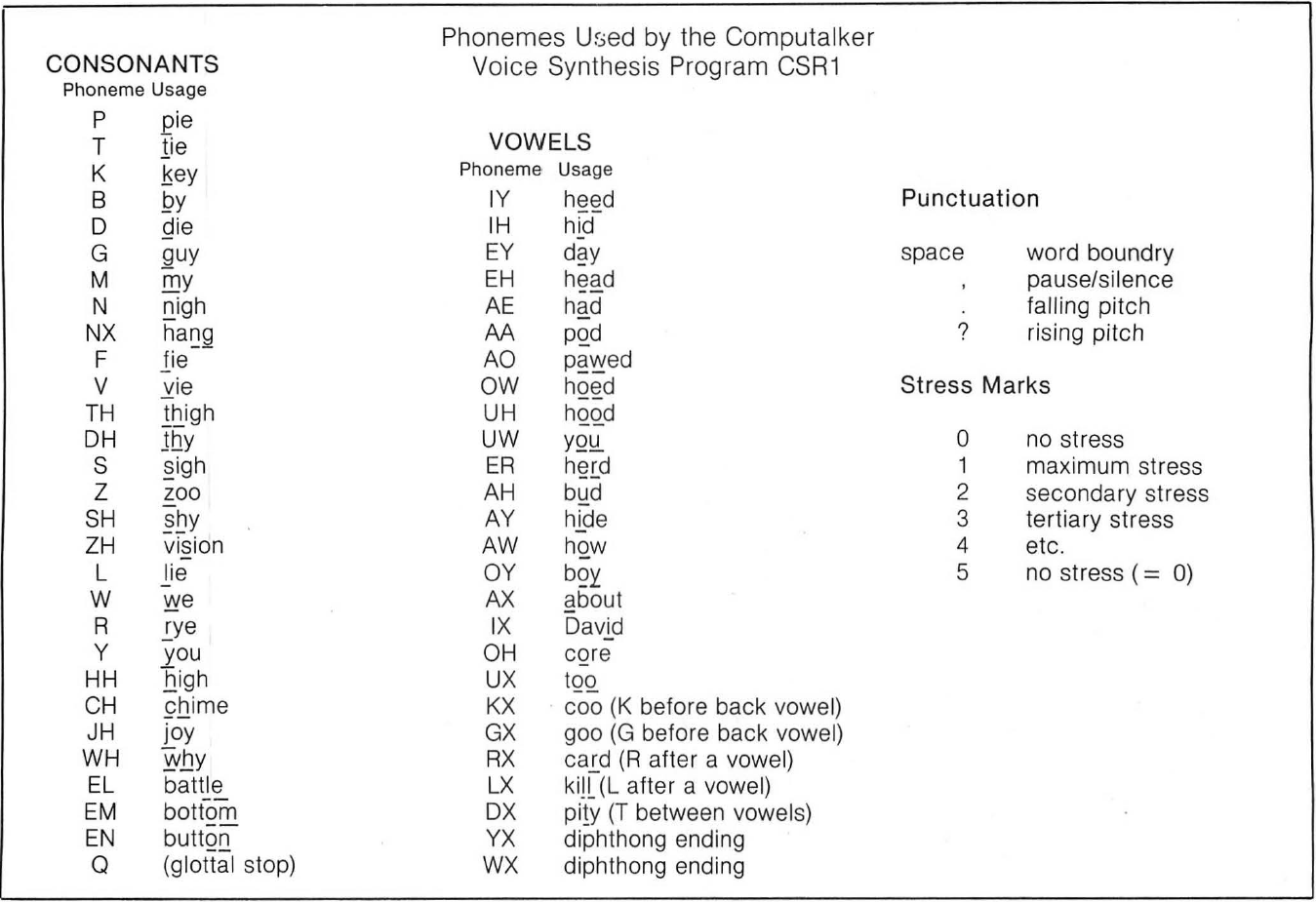

In 1976, Computalker Consultants of Santa Monica, California introduced a speech synthesizer for S-100 bus computers. The Computalker hardware consists of a single S-100 circuit board containing a series of oscillators and filters that can be directed to generate sounds that are produced by the human larynx and mouth. The board is an electronic simulation of the mechanics of human speech. A series of output ports (ie: locations on the computer enabling it to exchange information with the outside world) are used to send commands to the individual circuits on the board to change the voice pitch, amplitude, resonance, aspiration, and nasal quality. The board currently retails for approximately $400, but is similar in performance to the considerably more expensive ($11,000) Votrax speech synthesizer. Computalker also provides software support for the synthesizer, including parameter editing programs (for direct manipulation of the control voltages), and a synthesis by rule program called CSR1. CSR1 allows the user to type in groups of phonemes (the building blocks of spoken words) to make up words, phrases, and sentences (see text box). Most of the time Bill will want to type in English words and not bother with the phonetic spellings required by the Computalker CSR1 program. The purpose of the dictionary of words is to provide a way to store and find phonetic spellings.

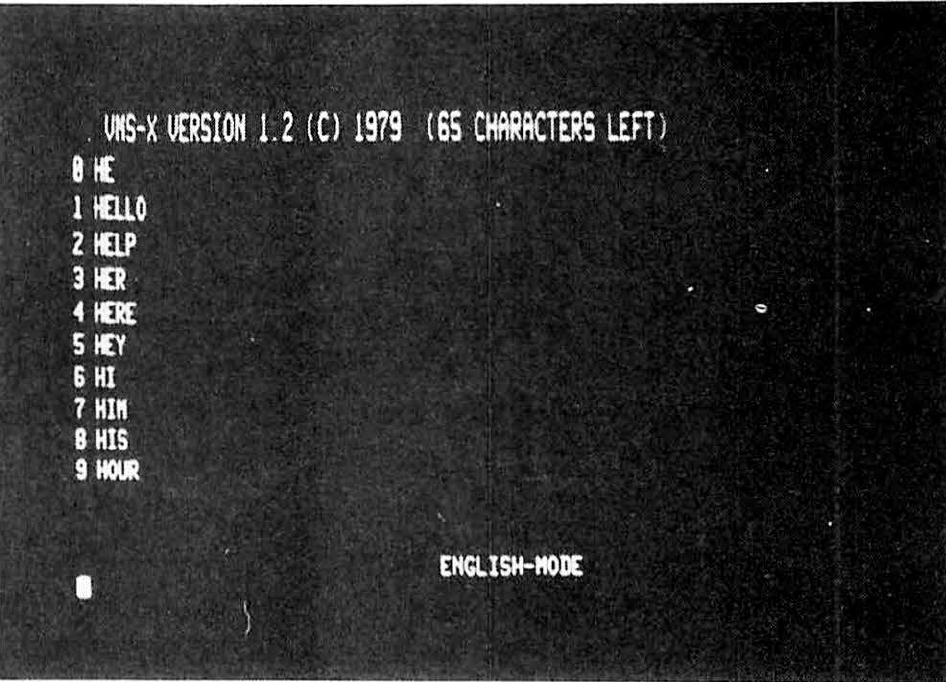

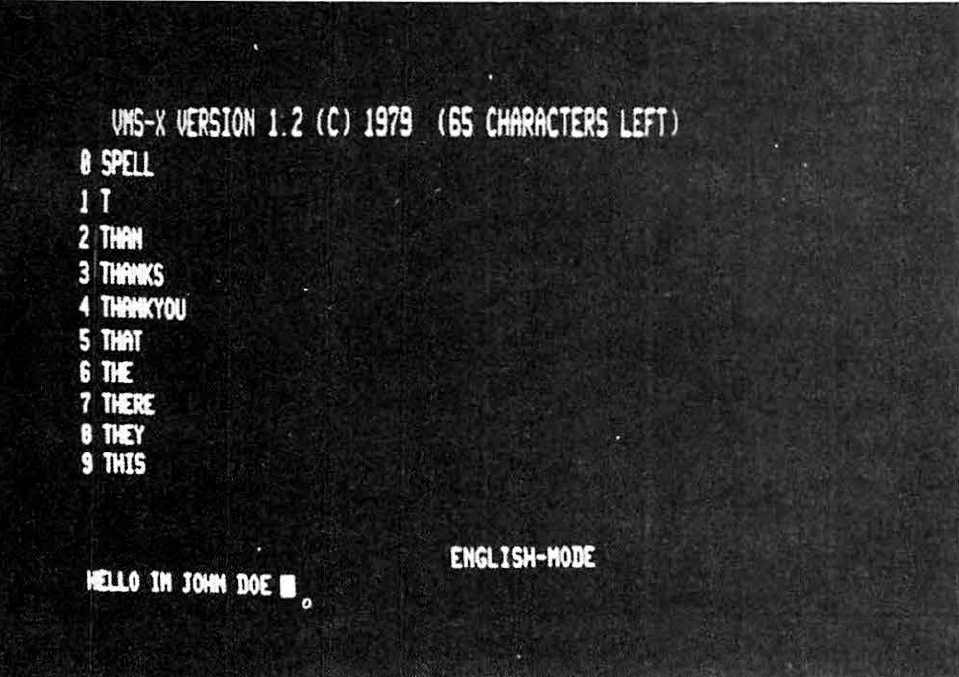

Photo 2 shows the video display as it will be set up for Bill. The top line is a title and version number stamp — mainly for version identification. The number to the right indicates the number of characters or bytes of storage left in the dictionary space in memory. The lines numbered zero to nine are display lines that act as a “window” into the dictionary. The next two lines are blank in the photograph, but are used for error messages, prompt messages, and so on. The next line is blank, except for the words “ENGLISH MODE”. This field is updated whenever the mode is changed from ENGLISH to PHONETIC or NUMBER-LETTER.

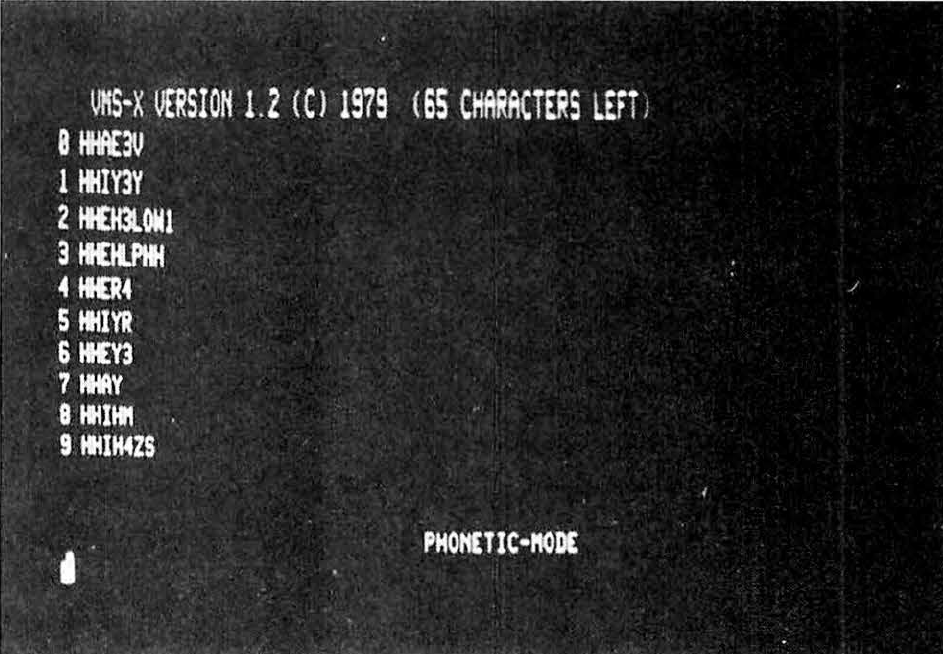

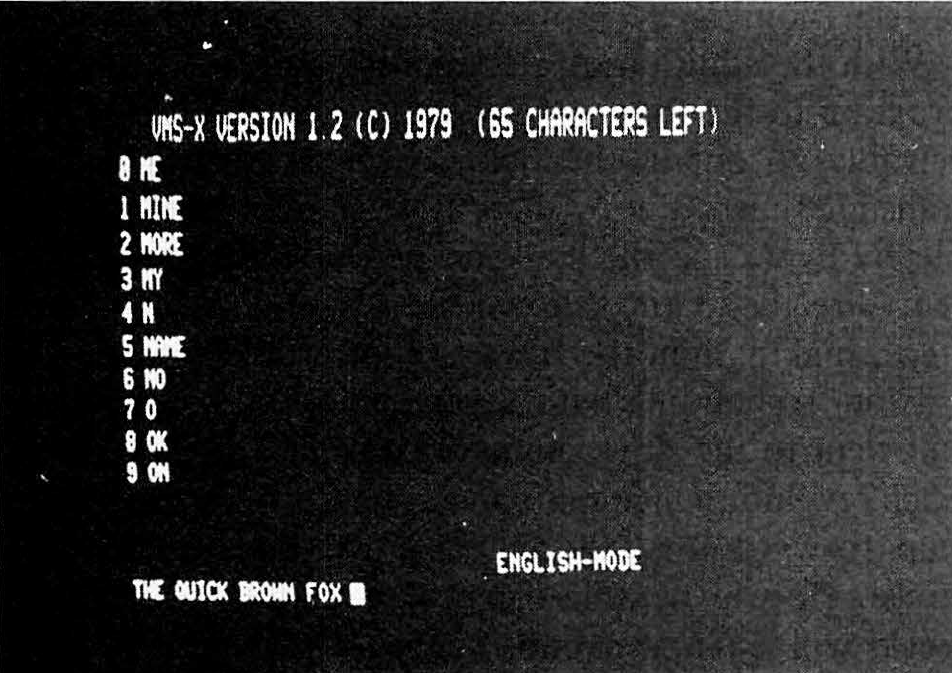

Photo 3 shows what happens when the mode is switched to PHONETIC. The ten lines of dictionary display now show the phonetic portion of each dictionary entry. Line 0 contains the phonetic spelling of HAVE, line 1 contains the word HE, and so on. Note that line 1 in photo 3 corresponds to line 0 in photo 2. Photo 4 shows a sample sentence typed in the text entry area of the screen (the bottom two lines). Text will be automatically “wrapped around” to the next line. Up to 128 characters may be entered at one time in this way.

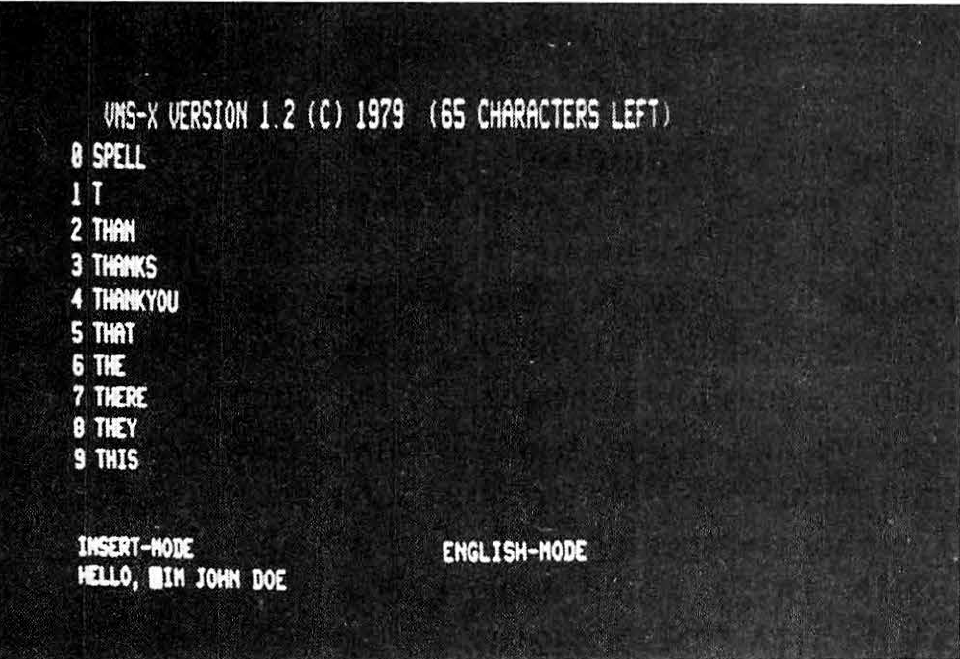

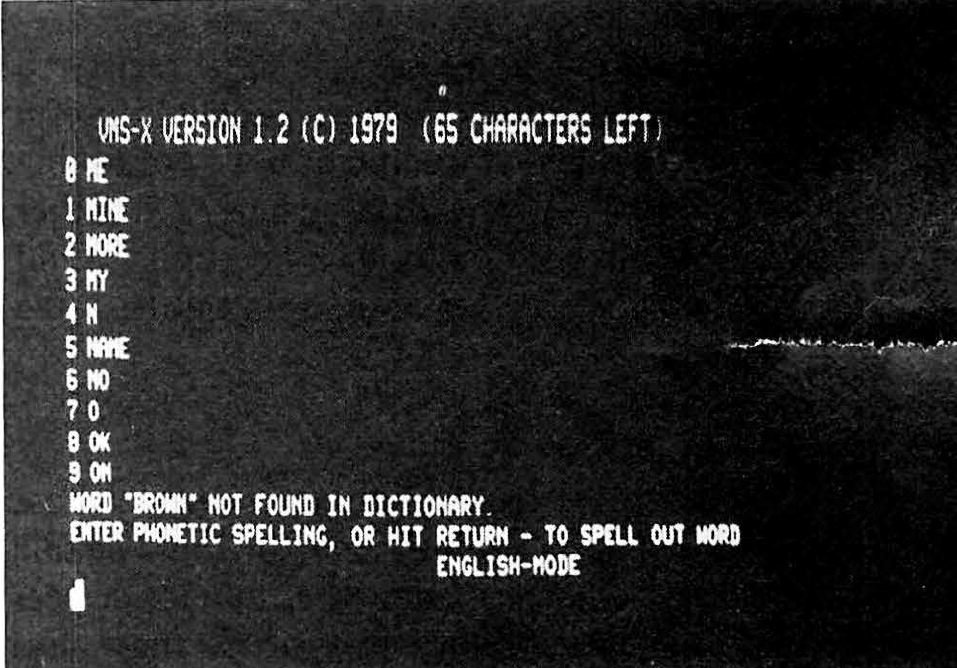

When a sentence such as “HELLO IM JOHN DOE” is entered, and RETURN is depressed, the program will separate the words, search the dictionary for each word, replace the English word with its phonetic equivalent, call the Computalker program “CSR1,” and vocalize the sentence in one continuous stream. All punctuation including periods, commas, question marks, and spaces will be left as is in the sentence. Suppose, in the above example, I discovered, after typing in the sentence, that I really wanted a comma after the word “HELLO.” The program emulates an intelligent terminal, with some very sophisticated editing features. The text may be corrected before it ever gets to the search and decode stage. In photo 5 a comma has been inserted using the insert mode function. In the insert mode, characters are inserted, and existing characters are moved over from wherever the cursor (white box) is.

In the next example, the phrase “THE QUICK BROWN FOX” has been entered (photo 6). When RETURN is depressed, the program begins to search the dictionary for each of the words. Photo 7 shows the response: “WORD “BROWN” NOT FOUND IN DICTIONARY.” The vocabulary management system then prompts the user to give a response. If a phonetic spelling is entered, the response will be added to the in-memory dictionary, along with the English spelling of the word, and the sentence will be completed and vocalized. If the user decides not to enter the phonetic spelling and merely hits RETURN, the sentence will be vocalized and the missing word spelled out letter by letter.
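The search-and-decode step described above can be sketched in a few lines of Python. This is only an illustration, not the original 8080 assembler: the function name `to_phonetic`, the `ask_user` callback, and the choice to represent a spelled-out word as its letters separated by spaces are all assumptions. The four phonetic spellings are the ones the article itself gives for this sentence.

```python
import re

# Illustrative in-memory dictionary: English word -> phonetic spelling.
# These four spellings come from the article's own example sentence.
DICTIONARY = {
    "HELLO": "HHIX3LOW1",
    "IM": "AY3M",
    "JOHN": "JHAA2N",
    "DOE": "DOH2W",
}


def to_phonetic(sentence, dictionary, ask_user=None):
    """Replace each English word with its phonetic spelling.

    Punctuation and spaces pass through unchanged. For a missing word,
    ask_user(word) (hypothetical prompt callback) may return a phonetic
    spelling, which is then stored; an empty reply, or no callback,
    makes the word come out letter by letter.
    """
    # Split into alternating runs of word characters and everything else.
    pieces = re.findall(r"[A-Z0-9']+|[^A-Z0-9']+", sentence.upper())
    out = []
    for piece in pieces:
        if not re.match(r"[A-Z0-9']", piece):
            out.append(piece)  # punctuation or spacing: leave as is
            continue
        phonetic = dictionary.get(piece)
        if phonetic is None and ask_user is not None:
            phonetic = ask_user(piece) or None
            if phonetic:
                dictionary[piece] = phonetic  # remember the new entry
        if phonetic is None:
            # Assumption: stand in for "spelled out letter by letter".
            phonetic = " ".join(piece)
        out.append(phonetic)
    return "".join(out)
```

With the comma inserted, `to_phonetic("HELLO, IM JOHN DOE.", dict(DICTIONARY))` returns `HHIX3LOW1, AY3M JHAA2N DOH2W.`, the string that would then be handed to CSR1.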

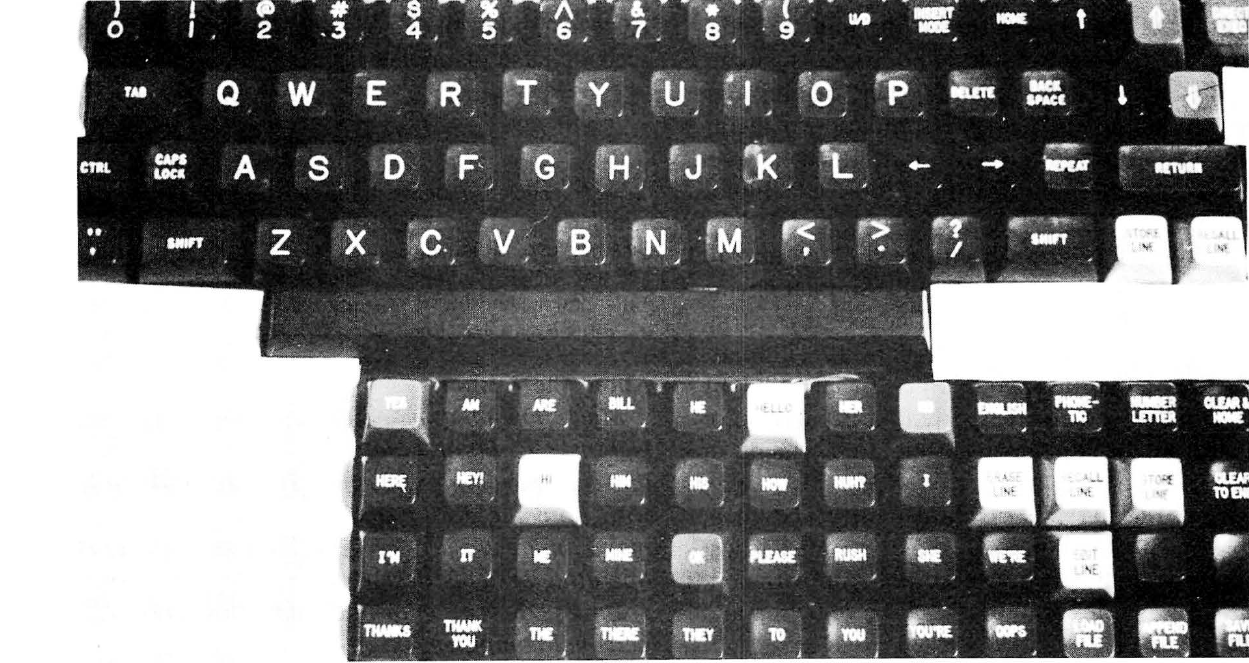

As mentioned before, the keyboard has a group of keys that have English words engraved on the faces (see photo 8). These keys work like regular keys in both the ENGLISH and PHONETIC modes by entering the whole word as if the user had manually entered it. In the NUMBER-LETTER MODE, depressing a key will cause the single word or phrase assigned to the key to be instantly vocalized. The letters of the alphabet and the numerals will also be vocalized when hit. This mode permits the synthesizer to be used as a spelling board. The DIRECT-EXEC-MODE works in a similar way, but causes the number keys on the keyboard to respond as lines on the screen. Thus, if ‘0’ is depressed, the word or phrase on line 0 on the screen would be spoken.

Dictionary Storage

My original design called for an 8080-based computer with 32 K bytes of programmable memory and a digital cassette deck running at 720 characters (bytes) per second. I later found that the cassette deck I wanted would not be available for twelve weeks. I did find a used North Star disk drive and controller that a friend was willing to sell for about the same price as the cassette drive. Since I do development work on my own iCOM minifloppies, I was able to write the custom software necessary to make the North Star disk I/O (input/output) subroutines look like the iCOM routines.

The disk interface is used for two purposes in the system: to load and start the vocabulary management system and for large-scale dictionary storage. When the system is turned on, a dictionary file containing about two hundred words and phrases is automatically loaded into the memory workspace set aside for the dictionary. Later, the user may wish to load a specialized or customized dictionary. Since it would be wasteful to redundantly store the most frequently used words in each custom dictionary file, the desired file is simply merged with the existing in-memory dictionary. The merge is done alphabetically so that all new dictionary entries are brought in and put in order. The other kind of file load is called “APPEND.” It works the same as a load, but first checks incoming words and phrases against the in-memory dictionary to see if they are already there. If found, the entry coming in from disk is ignored. I plan to add more features to the disk interface at a later date.
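The two kinds of file load can be sketched as one alphabetical merge with a flag. This is a Python illustration under assumptions, not the original disk code: the name `merge_entries`, the list-of-pairs layout, and the placeholder phonetic strings are hypothetical, and the article does not say what a plain load does with a duplicate word, so overwriting is assumed here.

```python
import bisect


def merge_entries(memory, incoming, skip_existing):
    """Merge incoming (word, phonetic) pairs into the sorted list `memory`.

    skip_existing=True models APPEND: an incoming entry whose word is
    already in memory is ignored. skip_existing=False models a plain
    load, where a duplicate replaces the stored entry (an assumption).
    """
    words = [word for word, _ in memory]  # parallel list of sorted keys
    for word, phonetic in incoming:
        i = bisect.bisect_left(words, word)  # alphabetical position
        if i < len(words) and words[i] == word:
            if not skip_existing:
                memory[i] = (word, phonetic)
            continue
        words.insert(i, word)
        memory.insert(i, (word, phonetic))
    return memory


# Placeholder phonetic strings; only HELLO's spelling is from the article.
base = [("HE", "<he>"), ("HELLO", "HHIX3LOW1")]
custom = [("HAVE", "<have>"), ("HELLO", "<other>")]
appended = merge_entries(list(base), custom, skip_existing=True)
loaded = merge_entries(list(base), custom, skip_existing=False)
```

Either way the new word HAVE is slotted in ahead of HE, so the in-memory dictionary stays in alphabetical order; only the handling of the duplicate HELLO differs.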

Using the Computalker

The phonemes used by the Computalker are shown in the box. The phonemes occur in one- or two-letter groups and may be separated by blanks, periods, commas, and question marks. A period causes a drop in pitch, and a question mark causes the pitch to be raised slightly. Stress marks, written as a digit after a phoneme as in the example that follows, range from 1 (maximum stress) to 5 (minimum stress). No stress mark is equivalent to a stress of 0. The phonetic spelling of the first example (HELLO IM JOHN DOE) would look like this: HHIX3LOW1, AY3M JHAA2N DOH2W.
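To make the notation concrete, here is a small Python sketch that splits a phonetic string into phonemes, stress digits, and separators. It is not CSR1's actual parser. The `PHONEMES` set is a hypothetical subset inferred from the example sentence alone (the full list is in the article's text box), and the greedy two-letters-before-one matching is an assumption.

```python
# Hypothetical subset of phonemes, inferred from the example sentence.
PHONEMES = {"HH", "IX", "L", "OW", "AY", "M", "JH", "AA", "N", "D", "OH", "W"}
SEPARATORS = " .,?"


def parse_phonetic(text):
    """Return a list of (phoneme, stress) tuples and separator strings.

    A phoneme with no stress digit gets stress 0, as the article states.
    """
    items = []
    i = 0
    while i < len(text):
        ch = text[i]
        if ch in SEPARATORS:
            items.append(ch)
            i += 1
        elif ch.isdigit():
            # A stress digit applies to the phoneme just before it.
            if not items or not isinstance(items[-1], tuple):
                raise ValueError(f"stress digit with no phoneme at position {i}")
            items[-1] = (items[-1][0], int(ch))
            i += 1
        else:
            # Assumption: try a two-letter group first, then one letter.
            for size in (2, 1):
                group = text[i:i + size]
                if group in PHONEMES:
                    items.append((group, 0))
                    i += len(group)
                    break
            else:
                raise ValueError(f"unknown phoneme at position {i}")
    return items
```

For instance, `parse_phonetic("AY3M")` gives `[("AY", 3), ("M", 0)]`: the vowel carries stress 3 and the unmarked consonant defaults to 0.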

After installing the Computalker synthesizer and loading the CSR1 program, I began to experiment with the phonetic spellings in an effort to learn how to make words come out intelligibly. After about an hour of playing I mastered most of the frequently used combinations and rarely had to refer to the list. On the average, I find that I have a success rate of about 75 percent on the first try at typing in a word phonetically. With regard to speed, about one second of computer time is required to process and set up three seconds of speech, prior to vocalization. The speed of voice output is not affected by the speed at which the text was typed in. All words come out at a consistent speed, that of normal speech. Once a word, phrase, or sentence is stored in the dictionary, recalling it may take only two or three keystrokes, further improving the response time. The Computalker hardware is capable of excellent voice reproduction, although the CSR1 program synthesizes speech with algorithmic techniques and has difficulties with some sounds and combinations of sounds. I have been working closely with Computalker Consultants to improve the voice quality. One advantage of the Computalker is that the phonetic translation is done in software rather than hardware. If a new and better version of the CSR1 program comes out, the old version can be replaced at one tenth the cost of new hardware.

Other Capabilities

The synthesizer can work with a variety of input and output devices. This is because every potential user will have different needs and capabilities. The user could design a keyboard with only predefined words and phrases on it, one with just standard alphanumeric keys, or one with any other combination of keys. The keyboard designed for Bill has a standard alphanumeric layout plus a group of thirty-four word keys and a set of command keys. If the system were to be used by a person with poor coordination, a keyboard with one inch square keys could be designed and plugged in. The possible variations are unlimited. The other advantage of the interchangeable keyboard is upward compatibility. If someone buys a system and later decides that more features are desired, a change of keyboard would make the extra features available without having to replace the whole system. If the user of the system is a young child, the first keyboard may have only a few predefined phrases and words. When it is outgrown, the system does not become obsolete; the keyboard does. The child can learn how to use it a little at a time. Also, the labels on the keys do not have to be in English. Foreign languages, symbolic codes, or even pictures could be used.

The computer that runs the system may be used for other purposes besides voice synthesis. A variety of software is available for the 8080 microprocessor/North Star combination. Some examples: educational game programs, computer-aided instruction, text editing/word processing, BASIC language interpreters, and so on.

Another use I have found for the synthesizer is foreign language translation. The English spelling of a word or phrase can be stored in the dictionary, and the foreign language phonetic spelling can be stored with it. When the word or phrase is recalled or typed in, the voice output will be in the foreign language. Unfortunately, it is not capable of a full grammatical and sentence structure translation, but there are some interesting possibilities.

One idea came from a friend who is a registered nurse. She suggested that the translation might be useful in the emergency room of a hospital as a way of asking simple questions like “Where does it hurt?” in other languages. The response to many such questions would have to be gestures or a yes or no nod, but at least the questions could be asked.

Bionics and the Future

Bionics, by definition, is the study of systems whose functions are based upon living systems. It could be said that automobiles and airplanes are bionic devices because they simulate biological systems that have evolved in nature. I have named the voice synthesizer the “Bionic Voice” because that is exactly what it is. We generally think of the capabilities of the human body. The distinction between bionics and tools is vague. Tools are extensions to the human body created through technology. Bionics deals with the simulation of living systems. If we build a bionic arm and attach it to an amputee, do we call the new arm a tool or a bionic replacement?

Similarly, computers have been called extensions of the human mind. In many respects, they simulate living systems, and may one day be classified as a life form, yet we refer to them as tools. Perhaps the ultimate distinction between tools and bionics (when used by humans) is whether or not they extend a capability, or replace something that was lost due to accident or disease.

In this case, Bill has had cerebral palsy since birth. With the aid of high technology, replacements for lost functions can be built. Yet, we need not stop with conventional voice synthesis. We can make it better and more sophisticated.

Bill once said, “The bionic man can see fifty miles. With the Bionic Voice, I’ll be able to shout fifty miles.” In a sense, we can all do that right now. Radio, telephones, and television allow our voices to be heard at incredible distances. The difference is that we are using a tool to amplify and transmit our voice. With Bill, the synthesized voice is already in a form suitable for transmission. Our world-wide communications network will become his extended nervous system. Bill will have something that many journalists would love to have: the potential for a direct link into the wire services, the newsroom word processing equipment, and even radio or television.

With the addition of a text editor and text formatter program to the Bionic Voice computer, Bill could conceivably go out in the field, cover a story, edit, and transmit finished copy directly over phone lines, completely bypassing the pad and pencil step. The story is sent directly to the editor’s desk without intervening steps, making him the fastest reporter in the world. From responding with a yes or no nod of the head, Bill went to the slow, clumsy headstick for expression. Each revolution in communications has been a quantum leap forward for Bill. Now he will have the Bionic Voice. He will be able to speak to the world and the world will listen. I cannot begin to imagine what uses Bill will find for his new voice, but if past accomplishments are any indication of things to come, I want to be around in five or ten years to see the results of the seed we have planted.

References

Gerardin, Lucien, Bionics, trans. Pat Priban (New York NY, 1968).

Rice, D Lloyd, “Friends, Humans, Countryrobots, Lend Me Your Ears”, BYTE, Number 12, August 1976.

Tanenbaum, Andrew S, Structured Computer Organization, Prentice-Hall Inc, Englewood Cliffs NJ, 1976.

Added in 2024: Wikipedia: Speech Generating Device
